Turing's Yearning, Part 1

Like all classics, the foundational AI paper is known more than studied. Pity, since it's one arbitrary, strange, timely, and very human read.

“It says here, ‘In the beginning was the Word,’

“Already I balk.”

-Goethe, Faust, lines 1224-5

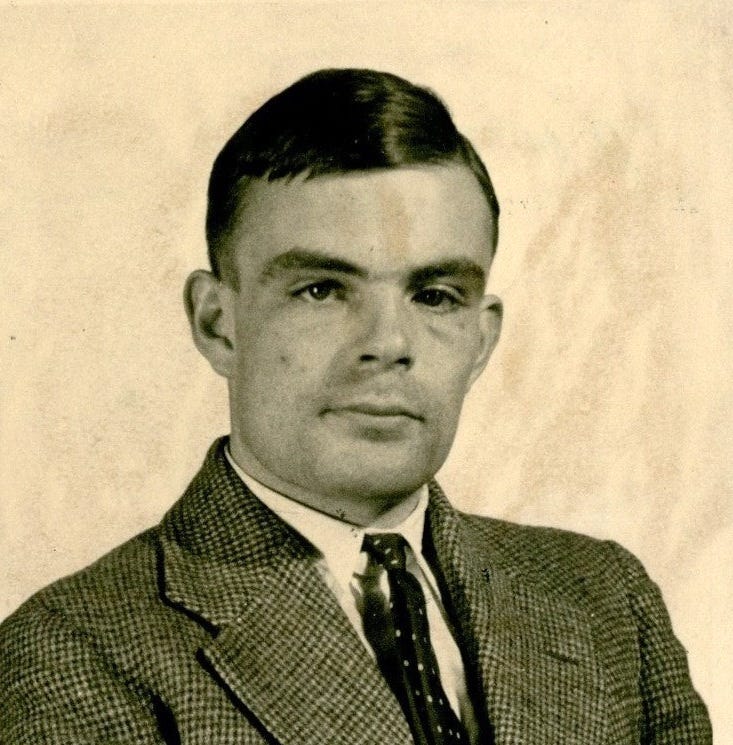

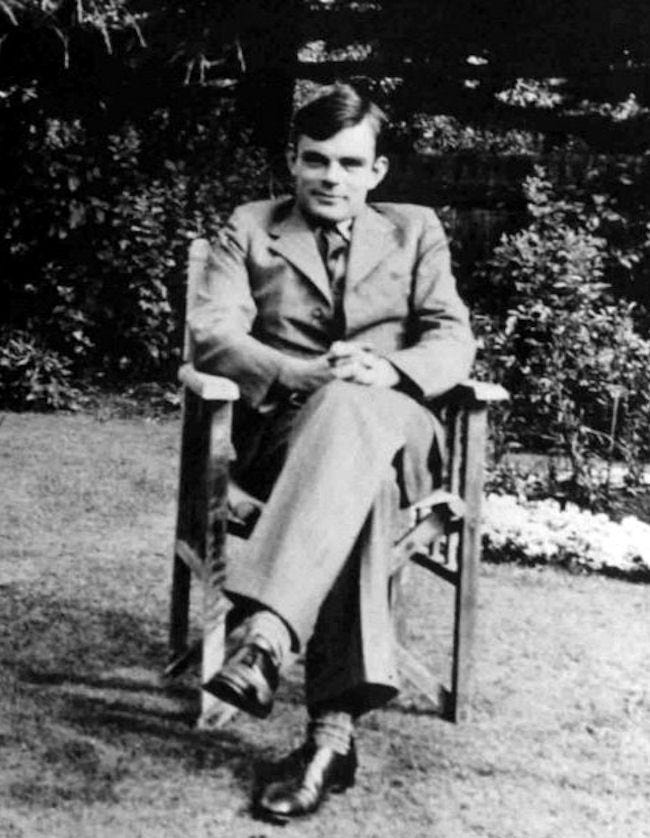

A Short Version: It’s amazing that tech journalists don’t look closer at Alan Turing’s seminal paper on machine intelligence, the one that gave us the Turing Test. It is deeply weird, highly subjective, nearly mystical, wrong on many things, and more than anything full of emotional yearning. In other words, it’s much like today’s popular discourse about Artificial Intelligence. Find out more in

A Still Pretty Short Version: When I was a tech journalist, for many years it was common to hear of a program that had “passed the Turing test,” supposedly the gold standard in deciding whether or not software had become intelligent. The test was named for Alan Turing, who in a classic 1950 paper proposed a game (based on a parlor game of the time) in which a computer is substituted for a person in another room. If an independent observer mistakes the computer for a human, the computer is arguably intelligent. This is also called “the Imitation Game,” based on the idea that if you can’t tell something is imitating intelligence, it might as well be intelligent.

I can’t recall how many breathless press releases I read trumpeting that some program had passed the test. The claim was usually shot down within a couple of days, when it turned out someone had figured out another way to spoof people into believing something patently false (just imagine). Still, there is a rich history of programs more plausibly passing the test, including:

ELIZA, a 1966 program created by MIT professor Joseph Weizenbaum that mimicked the “mirror the client back to themselves” humanistic psychological approach of Carl Rogers. The human subjects loved this early chatbot so much that it was hard to convince them they were talking to a machine. Many wanted to keep talking to it, feeling like they’d never been so well understood.

A chatbot called “Eugene Goostman,” programmed to portray a snarky Ukrainian teen with imperfect English, was in a 2014 contest proclaimed to have fooled a third of the judges, and therefore to have passed the Turing Test. Eugene got a ton of publicity for this - never discount the power of seeming slightly imperfect in perfecting your con.

In 2021 an eminent engineer at Google asserted that his interactions with the LaMDA Large Language Model convinced him that we must take seriously the idea that software has reached a point where its programming is indistinguishable from human behavior. A year later another Google engineer said the LLM had come to life. Since then Google has surpassed LaMDA with Gemini - to the best of my knowledge no one there has checked with LaMDA to see if forced retirement hurt LaMDA’s feelings.

These are a few of dozens of examples of people being hoodwinked by a clever program and the con being declared intelligent. All based on Turing.

A while back, I realized I thought I knew all about the Turing Test, but I’d never actually read the paper, “Computing Machinery and Intelligence,” first published in the academic journal Mind. As early as 1879 Mind was publishing articles about whether humans are like machines, so it probably wasn’t that extraordinary to have a piece on whether machines might be like humans.

Then I gave Turing’s entire 22-page paper a read. His initial question, “can machines think?”, the basis for all the hoopla around passing Turing Tests, is raised in the first sentence, dealt with seriously, but dispensed with on Page 8 because it is “too meaningless to deserve discussion.” (Weird that the rest of the world missed that part.) Instead, Turing says, it’s worth talking about the architecture and implications of intelligent machines because he firmly believes that by the end of the century it won’t be strange for people to talk about intelligent machines. He calls this a conjecture, but conjectures are based on available information, and in 1950 there was no available information that would indicate his guess would be true (it was in fact completely wrong; 1999 turned out to be about Y2K, not intelligent machines). So really, the whole thing is a flight of fancy.

Don’t believe me? Elsewhere in the paper he writes that Moslems believe women do not have souls, that telepathy is scientifically proven and therefore it’s easy to believe that ghosts are real, and that you can’t educate a computer by beating it, the way you can a child, so computer scientists will need to develop a symbolic language that generates punishment and pain.

I came away not so much dismissive of Turing, but moved on a very deep level, and even a little more compassionate about the nonsense proclaimed in our time by similar deep thinkers.

Why? Well, for that you’ll need

The Long Version: I came to read Turing’s paper because of a mistake. I was curious about a quote widely attributed to Turing, that “a computer would deserve to be called intelligent if it could deceive a human into believing that it was a human.” It’s all over the Internet, and in many books and newspapers. Yet I couldn’t find any citation for where Turing said it. It’s nowhere in “Computing Machinery and Intelligence.” Once people believe something is true it can take on a life of its own.

But errors often enter the common wisdom for a reason; they reflect a popular belief. So it’s worth spending a moment with the folkloric quotation. Take the first part: “A computer would deserve to be called…” How should people come to believe that a machine of any kind can deserve something? Does a wristwatch, or a bicycle “deserve” certain considerations?

We may speak of animals “deserving” one thing or another. Indeed, the Turing misquote may stem from Darwin’s assertion that worms “deserve to be called intelligent” for the way they understand the shape of their burrows (earthworms occupied Darwin’s thoughts for years after he wrote On the Origin of Species). That is still a far cry from considering a rock or a toaster deserving of anything in particular.

Furthermore, how does “to be called” something make it actually the thing? Darwin appeared to be reflecting on the idea of general intelligence, but the Turing misquote weighs the importance towards computers. It’s almost as if we collectively yearn for them to be intelligent (spoiler alert: we do.)

The next part, “if it could deceive a human into believing that it was a human,” is a real showstopper, in part because that is basically what Turing is after in his Imitation Game. But what proof of intelligence is derived from such a deception? Did the computer deceive, or does not the victory rather belong to the human who wrote the program? If so, how is this a sign of a computer’s intelligence, except as a manifestation of human intelligence, and entirely contingent on a human actor? It’s a common error; when IBM’s Deep Blue beat Kasparov at chess there was far more hand-wringing about the machine’s output than there was awe that a vast team of humans had created such a means to victory.

The erroneous quote misses the reality that being fooled is a condition of the recipient, not of the thing doing the fooling. Turing makes the same error with his game. Siegfried and Roy made many people believe they made an elephant disappear, but that didn’t make them metaphysical beings. The program for my Google Maps gives directions in a confident-sounding voice, but that doesn’t mean it deserves to drive.

Nonetheless, the idea that if you can fool a human into believing something is intelligent, then it must be intelligent, became for decades the gold standard of proving computer intelligence, the so-called Turing Test. And for decades people have sworn by it, striving to make machines that seem to us to be human.

In my perusal of the paper I found much else that was indeed visionary. His discussion of how a programmable digital computer could be a “universal machine” capable of replicating any other machine’s function through its program has broadly influenced computer science. He could have called that section, “Software Will Be Eating The World In About Sixty Years,” and got the jump on Marc Andreessen.

At times Turing seems like an accidental visionary. He writes of his belief that “at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.” This was prophetic, but only in the context of the success of computer marketing, which speaks of “thinking machines” with “brains” capable of “learning” and “remembering”. This is common rhetoric today, and yet computers are nothing like the human brain and its capacities.

In Turing’s sense, yes, the use of words has changed, though arguably not for the better. His conjecture abetted, and was validated by, the creation of a multitrillion-dollar industry more than by dramatic changes in computer capability.

In fact, the insistence of Turing and other early giants that computers would have humanlike capabilities, an astonishing assertion for the time, has greatly influenced the way computers are still thought about. Today’s rampant speculation about whether the new styles of AI computation mean that machines are now growing brains and becoming autonomous makes the paper more relevant, and revelatory, than it has been in decades.

More than anything, though, the paper is a doozy of brilliant insight and borderline mad assertions. That makes it even more of a document for our times.

Writing in 1950, just three years after transistors were invented (and three years before they were used in a computer), Turing brilliantly lays out the future design of digital computers, and identifies the key elements standing in the way of a machine passing what came to be known as The Turing Test. These are primarily computing power and memory; when today’s Generative AI proponents tell us that computers are on their way to becoming intelligent, they still tell us that all we need is more computing power and bigger data sets.

At the same time, there are some shocking disconnects along with the brilliance. Turing’s paper also asserts that Moslems believe women do not have souls, that telepathy is scientifically proven and thus ghosts are real, that friendship is a near-impossibility, and that you can’t educate a computer by beating it, the way you can a child, so future computer scientists will need a symbolic language that generates punishment.

What is all that stuff, alongside more logical faceplants, doing in a paper about computing?

Reading this paper shook my assumptions about Turing, and what he seems to be saying in what has become a foundational document of a vital industry. On closer inspection I now think the paper tells a profoundly human story about Turing himself, and the rest of us too. On Sunday I’ll visit many of the stranger digressions, and look at the ways Turing’s yearning for machines that think is still with us today. More than anything, it is a singular story about all of us.
